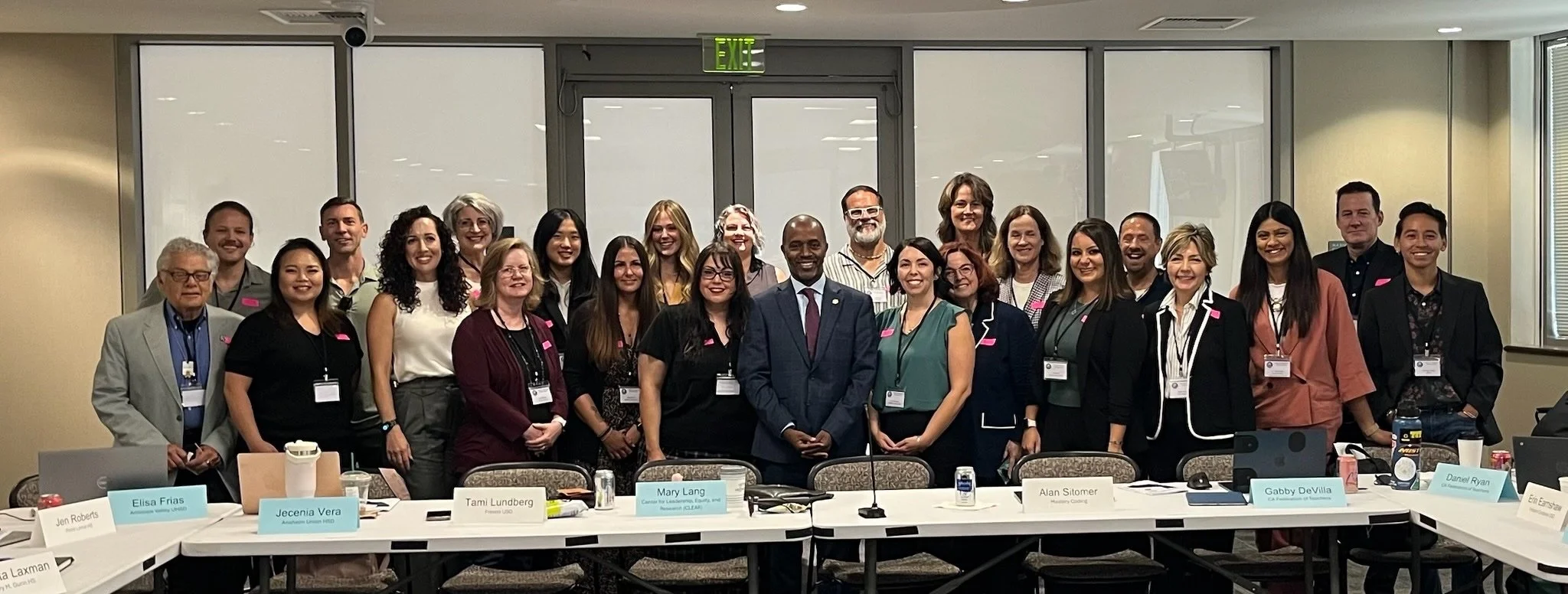

The first meeting of California’s AI in Education Working Group

Last week, aiEDU had the privilege of joining the California AI in Education Working Group’s first public meeting after its establishment under last year’s SB 1288.

What stood out to us most wasn’t the tech talk – it was the people.

The room was mostly educators: classroom teachers, librarians, district leaders, higher-ed faculty, and even a few students. You could feel the lived reality of school in every comment: bell schedules, parent emails, grading stacks, the wins, and the worries.

That energy matters.

If AI is going to help our schools, it has to serve that reality.

What we saw and felt

AI is ubiquitous, so AI literacy has to be systemic.

No one was arguing whether AI is “coming” – it’s here. Students already meet it as a search engine, a study buddy, sometimes even as a therapist.

The task isn’t to gatekeep access; it’s to equip people (students and adults) with the skills and knowledge to use AI critically, ethically, and confidently. That’s AI literacy, and it belongs across the system in policy, pedagogy, professional development, procurement, and parent communication.

Human relationships stay at the center.

Multiple educators said it better than we can: AI should give us back time for the human work like feedback, conferencing, and noticing.

If tools take grading away from teachers but also take them out of the learning loop, we’ve missed the point. Augment, don’t amputate.

The right “order of operations” calms anxiety.

When adoption starts with tools, people brace for whiplash. But when it starts with vision (What are the outcomes for students? What are the goals? What are the guardrails?), then tools can follow.

We heard tangible ways to do this: AI maturity checklists, district literacy plans, and “curiosity corners” where staff can try things without feeling judged.

Student voice rightly pushed us.

The student members were phenomenal. They named both the fears (“AI will take our jobs/art/voice”) and the asks (“Show us how it’s actually useful”). They asked for projects that produce real results where AI accelerates the last mile but doesn’t replace their thinking.

With AI, students want to take on schoolwork that has a practical impact on the world around them. They want school to feel relevant to the future they’ll be living in. That’s a north star.

Professional development should be by educators, for educators, and assessed.

Teachers don’t need another playlist of videos. They need paid time, hands-on protocols, and feedback on actual artifacts (prompts, lesson shells, assessments).

We assess students, and we should also support and assess teacher AI literacy in dignified, growth-oriented ways.

Don’t add standards – integrate them.

One theme we echoed in our breakout session: please don’t bolt a brand new list of AI standards onto an already overloaded system.

Instead, thread AI literacy through existing standards (ELA media literacy, science practices, visual & performing arts, and CTE). Make it measurable where it lives, not in a silo.

Metacognition is the throughline.

A veteran educator reminded us that we must explicitly teach “thinking about thinking.”

AI is fallible. Bias exists. The skill is to interrogate outputs, refine prompts, cross-check sources, and decide when not to use the tool – that’s the muscle we want students and adults to build.

Equity is design, not a disclaimer.

AI can widen gaps if it’s only accessible, legible, or safe for some learners. That’s why the best demos we heard were the ones that incorporated UDL, multilingual support, and special education access into the starting design – not the last paragraph.