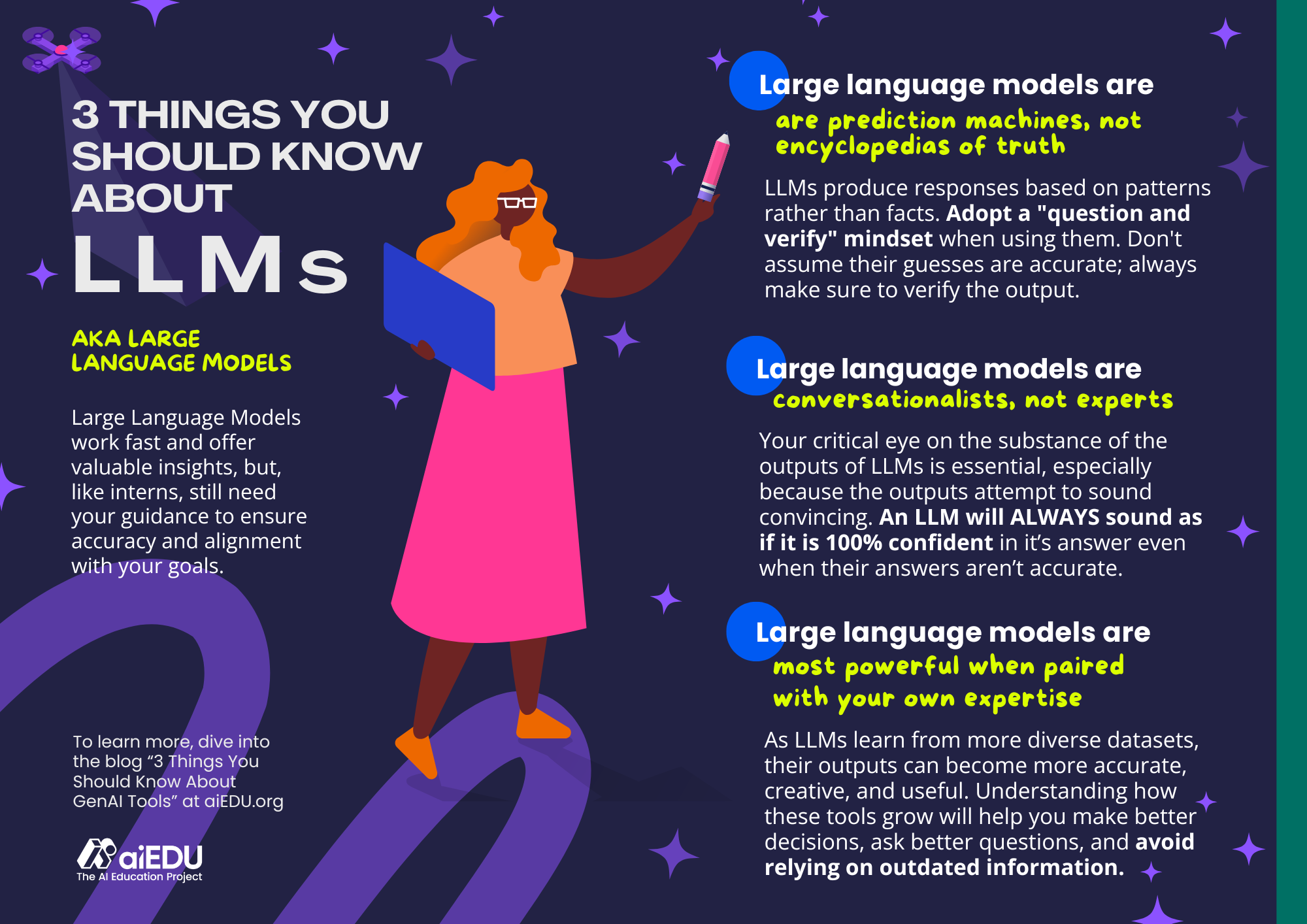

3 things you should know about LLMs

Large Language Models (LLMs) are like eager interns: brimming with ideas, quick to create, but sometimes a little too confident. And like interns, LLMs regularly make mistakes! 😆

More specifically, LLMs are the backbone of many generative AI apps, which use conversational inputs (prompts) to create human-like outputs such as text, images, and video.

LLMs in the classroom

aiEDU’s Educator Framework emphasizes the importance of knowing and modeling the basics of AI (foundational skills) from the start. Building personal fluency in AI literacy and readiness isn’t just helpful; it’s essential for educators.

After all, we can’t expect students to do something if we don’t do it ourselves.

To use LLMs effectively in the classroom, it's essential to understand their strengths and limitations. Before you assign your AI-powered intern its next task (or prompt), keep these three key insights in mind:

LLMs are conversationalists, not experts. Using natural language processing and machine learning, LLMs can engage you in conversation, making it feel like you’re chatting with a confident colleague.

But remember that LLMs are like interns, not experts: they aim to impress. Because their outputs tend to sound highly convincing, it's essential to review, research, and refine them.

LLMs are most powerful when paired with your own expertise. When you're familiar with the subject matter, you can easily guide, refine, and fact-check an LLM’s output.

Like interns, LLMs depend on YOU to help them improve! Your existing knowledge helps you ask better questions, write clearer prompts, and spot inaccuracies quickly. Using LLMs to support your own area of expertise helps ensure they serve as helpful collaborators rather than sources of questionable information.

LLMs can be powerful tools that offer significant support. However, they require thoughtful oversight and subject-matter knowledge to evaluate their output. By understanding their limitations and treating LLM-generated content as a starting point rather than an endpoint, you can get the most value from them in your practice.